In an era where technology permeates every aspect of our lives, the rise of artificial intelligence (AI) has promised revolutionary solutions to societal challenges. From healthcare to finance, AI is touted as a tool to improve efficiency and accuracy. Yet as these algorithms move into sensitive domains like law enforcement, the promises of AI are increasingly overshadowed by disturbing realities. Predictive policing, a technology that uses data to forecast where and when crimes will occur, is one such case. Instead of delivering unbiased justice, predictive policing algorithms are scaling systemic and structural inequality, exacerbating homogeneity and exclusion in society.

At first glance, the idea of predictive policing seems straightforward and even beneficial. By using historical crime data, algorithms predict which areas are most likely to experience criminal activity, allowing police to allocate resources more efficiently. This data-driven approach appears to eliminate human biases, creating a seemingly neutral solution to crime prevention. However, this perception is dangerously misleading. Predictive policing systems are built on historical data that reflects decades of biased law enforcement practices. The very data that fuels these algorithms is tainted by patterns of over-policing in marginalized communities, often along racial and socioeconomic lines. When an algorithm relies on biased data, it doesn’t eliminate injustice; it codifies it, amplifies it, and scales it to a previously unimaginable degree.

The heart of the issue lies in the AI alignment problem. Predictive policing algorithms are often misaligned with societal values like fairness and equality. These systems are designed to predict crime, but in reality they often predict who is most likely to be arrested, not necessarily who is committing crimes. This critical distinction highlights how the system fails to account for the underlying causes of crime, such as poverty, education disparities, or systemic neglect of certain communities.

For example, if an algorithm is trained on historical arrest data from neighborhoods with a high concentration of minority populations, it will disproportionately predict more crime in those same neighborhoods. Law enforcement, guided by these predictions, increases its presence, making more arrests and feeding this new data back into the system. The result? A self-perpetuating cycle where over-policing reinforces the very patterns the algorithm was supposed to break, entrenching systemic inequality rather than alleviating it. Instead of addressing the root causes of crime, predictive policing algorithms become tools that replicate and scale historical injustices, reinforcing biased practices and disproportionately targeting already marginalized communities.
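The cycle described above can be illustrated with a small toy simulation. This is only a sketch under stated assumptions, not a model of any real predictive policing product: the function name `simulate_feedback_loop`, the two-area setup, the 80/20 patrol split, and all numbers are hypothetical. Both areas are given the same underlying crime rate; the only difference is that one starts with more recorded arrests.

```python
def simulate_feedback_loop(initial_arrests, true_crime_rate,
                           rounds=10, patrols=100, hotspot_share=0.8):
    """Return each area's share of recorded arrests after every round.

    Hypothetical toy model (requires at least two areas): the area with
    the most recorded arrests is treated as the predicted hotspot and
    receives most of the patrols; new arrests scale with patrol presence.
    """
    recorded = list(initial_arrests)
    history = []
    for _ in range(rounds):
        # "Prediction": the area with the most recorded arrests is the hotspot.
        hotspot = max(range(len(recorded)), key=lambda i: recorded[i])
        other_areas = len(recorded) - 1
        for i in range(len(recorded)):
            if i == hotspot:
                share = hotspot_share
            else:
                share = (1 - hotspot_share) / other_areas
            # Recorded arrests depend on where police are sent,
            # not on any difference in underlying crime.
            recorded[i] += patrols * share * true_crime_rate[i]
        total = sum(recorded)
        history.append([r / total for r in recorded])
    return history


# Two areas with the SAME true crime rate but skewed historical arrest counts.
history = simulate_feedback_loop(initial_arrests=[60, 40],
                                 true_crime_rate=[0.1, 0.1])
for round_number, shares in enumerate(history, start=1):
    print(round_number, [round(s, 3) for s in shares])
```

In this sketch the first area's share of recorded arrests climbs from its initial 60% toward roughly 70% over ten rounds, even though both areas have identical underlying crime rates. The growth comes entirely from where patrols are sent, which is the self-reinforcing pattern the paragraph above describes.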

Beyond entrenching inequality, predictive policing fosters a dangerous homogenization of how crime and communities are perceived. By continuously focusing on specific areas, the algorithm reinforces a narrative that these neighborhoods are inherently criminal, while wealthier, predominantly white areas remain under-policed and thus statistically “safer.” This data-driven branding of entire communities as crime hotspots homogenizes them in the public eye, overshadowing the nuances of local life and further marginalizing the residents.

Moreover, the presence of increased police surveillance in these areas creates a pervasive sense of criminalization, especially for young people of color who are disproportionately affected. As algorithms lead to over-policing in these neighborhoods, they foster an atmosphere of suspicion and fear, further alienating already disenfranchised populations. As a result, predictive policing perpetuates a form of exclusionary law enforcement, where certain communities are continuously monitored and subjected to punitive measures, while others are overlooked entirely. This not only deepens societal divides but also reinforces the false belief that crime is a product of certain neighborhoods rather than the result of complex social and economic factors.

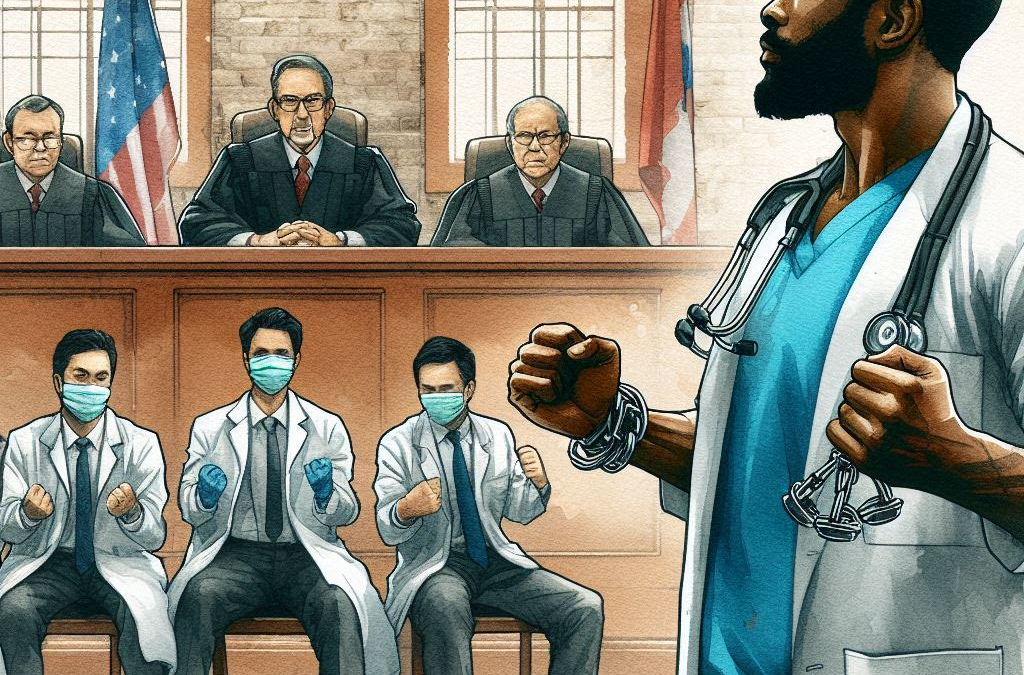

The scaling of injustice through predictive policing has profound consequences for both democracy and social justice. As AI systems like these continue to expand, they not only perpetuate existing biases but also create new forms of exclusion. Those disproportionately affected by these algorithms are often left without a voice in the system, unable to challenge the algorithmic decisions that shape their lives. In this way, AI entrenches the homogeneity of law enforcement: marginalized groups have little say in how they are policed and are excluded from the broader social contract.

By cementing patterns of inequality, predictive policing undermines the principles of justice, fairness, and equality that are essential to a functioning democracy. When the criminal justice system becomes so deeply intertwined with biased technologies, it ceases to serve all citizens equally and instead becomes a mechanism for reinforcing the status quo.

To address the structural and systemic injustices caused by predictive policing, it is essential to rethink how AI systems are designed, implemented, and regulated. The fairness of AI systems needs to be prioritized, ensuring that they do not disproportionately target marginalized communities. This requires addressing the bias in the data, making the decision-making processes of these algorithms transparent, and involving ethicists, sociologists, and community leaders in the design process.

At the same time, AI systems must be aligned with broader societal values—like justice, equity, and the protection of civil rights. Policymakers and technologists must work together to ensure that AI is used to support these goals, rather than undermine them. This includes rethinking how data is collected, interpreted, and applied in policing, as well as ensuring that communities most affected by these systems have a voice in how they are used. Ultimately, AI and predictive policing algorithms must not be seen as neutral tools. They are reflections of the society that builds them, and if they are to serve society justly, they must be built with fairness, alignment, and inclusion at their core.

As predictive policing algorithms become more pervasive, they risk scaling the very injustices they claim to solve. Instead of reducing crime, these systems often reinforce systemic and structural inequalities, creating cycles of over-policing and exclusion that disproportionately affect marginalized communities. To break this cycle, society must demand more from AI—more transparency, more fairness, and more alignment with the values of justice and equality. Only then can technology serve as a tool for progress rather than a force for exclusion.

By highlighting the ways in which predictive policing algorithms exacerbate social divisions, this article underscores the importance of critically examining the role of AI in law enforcement. Rather than accepting technology as a neutral force, we must interrogate how it interacts with and often reinforces the systemic biases that shape our world.

The Author received an honorable discharge from the U.S. Navy, where he used regional anesthesia and pain management at Walter Reed Hospital to treat soldiers injured in combat. The Author is passionate about medical research and biotechnological innovation in the fields of 3D printing, tissue engineering, and regenerative medicine.
